Automating Website Maintenance: How I Created a Broken Link Monitoring System

In the hustle of digital marketing, SEO and marketing teams are constantly juggling a million things—content creation, optimization, campaign management, analytics—you name it. With so many tasks on their plate, regular website maintenance, especially checking for broken links, often gets pushed to the back burner. As a result, these pesky broken links can slip through the cracks for weeks or even months, piling up and quietly chipping away at your site’s performance.

Broken links aren’t just an annoyance for users—they’re also bad news for your SEO. They mess with your site’s link equity and send all the wrong signals to search engines, which can tank your visibility and rankings over time. For teams that are already stretched thin, finding the time for regular link audits feels next to impossible, and manual checks just aren’t practical, particularly for larger sites.

That’s why I decided to build an automated system to handle broken link monitoring. In this case study, I’ll walk you through how I set up an automated process that keeps our site in check, catches issues before they spiral, and takes the pressure off the team. By automating this piece of website maintenance, we’ve managed to keep things running smoothly without the constant need for manual intervention.

The problem

Manually checking for broken links is a hassle—tedious, time-consuming, and often pushed aside as teams get caught up in other priorities. I learned this the hard way when I was the first in my team to run a broken link audit in over two years. What I found was eye-opening: a crucial call-to-action (CTA) in our educational section had been broken the entire time. This section was supposed to convert engaged readers into paying customers, but instead, thousands of users hit a dead end. Not one converted. That broken CTA wasn’t just a small mistake; it was a huge missed opportunity and a painful reminder of how costly these errors can be when regular checks fall through the cracks.

This experience underscored a bigger issue: manual checks just aren’t cutting it, especially for larger websites or busy teams with limited resources. What’s needed is a more efficient and reliable approach—a way to catch and fix broken links before they snowball into bigger problems. That’s where automation comes in, providing a systematic way to identify and resolve broken links quickly, protecting both your user experience and your SEO efforts.

The objective

The goal was pretty straightforward: build an automated system that keeps tabs on our website for broken links and gives us a heads-up when something’s off. Instead of always playing catch-up and fixing issues after they’ve already caused damage, we wanted to catch errors as they arise and correct them before they could hurt the website’s performance. This would keep the site in good shape without having to constantly rely on manual checks. No more big missed opportunities, no more dead ends for users.

But it wasn’t just about spotting broken links. We had a few other key objectives in mind:

Boosting Website Health Scores: Fixing broken links regularly helps keep the site healthy, which is a big deal for search engines. A cleaner site means better rankings, more visibility, and an overall stronger digital presence.

Enhancing User Experience: Few things annoy visitors more than landing on a broken link. By making sure all our links are working, we’re not just saving users from frustration—we’re keeping them engaged and encouraging them to explore more of our content, which ultimately leads to more conversions.

Maintaining SEO Integrity: Broken links mess with the flow of link equity. My automated system was designed to keep that flow intact, ensuring our SEO efforts aren’t wasted and our hard-earned “link juice” continues to do its job.

In the end, this project wasn’t just about fixing broken links; it was about creating a smarter, more efficient way to keep our website running smoothly. Automating this part of our maintenance means the team can focus on doing what we do best without fear that broken links are slipping through the cracks.

Planning: figuring out what we needed

Before jumping into building the solution, we had to figure out what we really needed: a system that could automatically keep an eye on our website for broken links and alert us when something went wrong. Manual checks were out of the question—they’re way too time-consuming, prone to mistakes, and just not practical for bigger sites. Automation was the obvious answer. We wanted something that could run in the background, doing its thing without needing constant supervision, so we could focus on more strategic work.

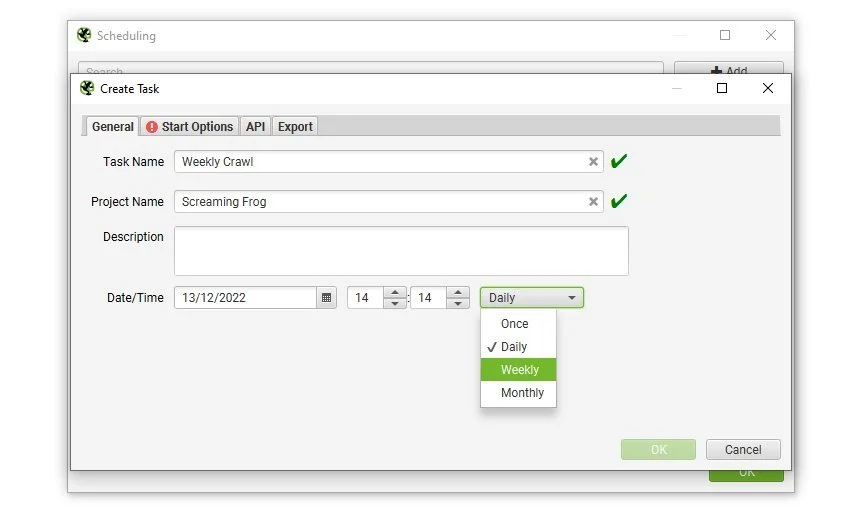

The main requirement was to set up a daily crawl of the website to catch any broken links. For this, we used Screaming Frog, a well-known SEO crawling tool that’s perfect for the job, especially with its headless mode that lets you run automated tasks without lifting a finger. I set up Screaming Frog to do its crawl every day and export the data on broken links directly into a designated folder in my Google Drive called “Broken Link Audit.” Linking Screaming Frog to Google Drive was easy—just follow the prompts, pick the folder you want for your exports, and you’re good to go. Each day, you’ll see a new folder with that day’s date (e.g., “22.08.2023”) pop up in Google Drive with all the broken link data inside.

The next challenge was figuring out how to compare these daily exports and get notified when things went south. I went with MAKE.com (formerly Integromat) because it’s super intuitive, budget-friendly, and fit our needs perfectly, though you could use other tools like Zapier if you prefer. MAKE.com works on a module system, where each module represents a step in your logic—kind of like building blocks.

Here’s the basic idea: every day, MAKE.com grabs the latest broken link data from Google Drive and compares it to the data from the day before. If the system spots a spike in broken links or catches any critical errors, it shoots an alert straight to our team’s Slack channel. This way, we’re not just collecting data but actively using it to catch issues as they happen.
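As a rough illustration of the "latest versus the day before" step, here is a minimal Python sketch that picks the two most recent export folders by the date in their names. The `DD.MM.YYYY` format is taken from the example folder name above; the function name and the sample folder list are hypothetical, and in the real setup MAKE.com handles this lookup.

```python
from datetime import datetime

# Assumed from the example folder name "22.08.2023".
DATE_FORMAT = "%d.%m.%Y"


def latest_two_exports(folder_names):
    """Return (previous, latest) folder names, ordered by the date in the name."""
    dated = []
    for name in folder_names:
        try:
            dated.append((datetime.strptime(name, DATE_FORMAT), name))
        except ValueError:
            continue  # skip anything that is not a daily export folder
    if len(dated) < 2:
        raise ValueError("need at least two dated export folders to compare")
    dated.sort()  # sorts by the parsed date, not by the raw string
    return dated[-2][1], dated[-1][1]


# Hypothetical folder listing, including one unrelated folder.
folders = ["31.08.2023", "01.09.2023", "notes", "30.08.2023"]
previous, latest = latest_two_exports(folders)
print(previous, latest)
```

Parsing the date matters here: sorting the raw names alphabetically would put "01.09.2023" before "30.08.2023" and pair up the wrong days.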

Building the Automated Broken Link Monitor

Putting together the broken link monitoring system was all about following a clear, step-by-step process to make sure we got the data right and set up the automation accurately. A key thing to remember is that Screaming Frog’s export data is always structured the same way—origin URLs, destination URLs, and error codes are consistently found in the same columns, which makes it easier to work with once you know what you’re looking for.

Here’s a breakdown of how I set up the automated monitor, covering each stage of data prep and the logic needed to make it all work. Let’s dive in!

Stage 1: Eliminating Irrelevant Data

The first task was to clean up the data we got from Screaming Frog by removing the stuff that was deemed “out of scope”. The export file includes all sorts of URLs and site elements, but not everything in there is useful for monitoring broken links. We had to trim it down to avoid any clutter that could mess with our analysis.

Types of Irrelevant Data:

HTTP Redirects

XML Sitemap entries

Sitemap Hreflang tags

HTML Hreflang tags

JavaScript files

PDFs

What we really needed to focus on were the “Hyperlinks” listed in Column A. By filtering down to this specific category, we cut out the noise and zeroed in on the links that could actually affect the user experience and SEO. This made the dataset much cleaner and easier to work with as we moved on to the next steps.

Stage 2: Narrowing Down to Relevant Broken Links

Next, we zoomed in on the broken links that actually mattered. Screaming Frog conveniently categorizes broken links by their status codes in Column G, making it easy to sort through the data. For this project, we were laser-focused on the most critical issues:

Irrelevant Status Code: “403” (Forbidden) links weren’t our focus for this exercise since they don’t typically impact user flow in the same way.

Relevant Status Code: “404” (Not Found) links were our main concern because they indicate that the target page couldn’t be found, directly affecting user experience and SEO.

By filtering down to just the 404 errors, we made sure our monitoring system was locked in on the most impactful broken links, cutting out any unnecessary noise.
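Stages 1 and 2 boil down to two filters on the export. The sketch below shows one way to express them in Python, assuming the column positions described above (Column A for the link type, Column G for the status code). The sample rows, the header names, and the exact cell value "Hyperlink" are illustrative assumptions, so check them against your own export.

```python
import csv
import io

# Invented sample data standing in for the Screaming Frog export.
# Header names are assumptions; only the column positions come from the article.
SAMPLE_EXPORT = """Type,Source,Destination,Size (Bytes),Alt Text,Anchor,Status Code
Hyperlink,https://websitedomain.com/en/,https://websitedomain.com/en/old-page,0,,Old page,404
Hyperlink,https://websitedomain.com/en/,https://websitedomain.com/en/private,0,,Private,403
Hreflang,https://websitedomain.com/en/,https://websitedomain.com/de/alt,0,,,404
"""

TYPE_COL = 0    # Column A: link type
STATUS_COL = 6  # Column G: status code
LINK_TYPE = "Hyperlink"  # assumed cell value; adjust to match your export


def relevant_broken_links(export_text):
    """Keep only hyperlink rows whose status code is 404."""
    reader = csv.reader(io.StringIO(export_text))
    next(reader)  # skip the header row
    return [
        row for row in reader
        if len(row) > STATUS_COL
        and row[TYPE_COL] == LINK_TYPE
        and row[STATUS_COL] == "404"
    ]


rows = relevant_broken_links(SAMPLE_EXPORT)
print(len(rows))
```

In the sample, the 403 row and the hreflang row are dropped, leaving only the hyperlink that returns a 404.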

Stage 3: Categorizing URLs by Website Area

To make the monitoring system more effective, we needed to categorize the URLs based on their importance within the website. This helped us prioritize which broken links required immediate action. We broke the URLs into different groups to reflect their significance, like top-ranking pages, high-converting pages, and key transactional areas.

Step 3.1: Identifying Site-Wide Links

First up were the site-wide links—these are URLs that pop up frequently across the entire site, like in navigation menus or footers. To spot these, I filtered Column C to find links that showed up more than 200 times, indicating they were site-wide. It’s key to focus on the destination URL here because the source URLs are spread all over the site.

I chose the threshold of 200 occurrences arbitrarily. You can set it higher or lower to reflect the size of your website.
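The site-wide check is a frequency count on the destination URL. A minimal Python sketch, assuming the broken links are available as (source, destination) pairs; the function name and the sample URLs are hypothetical, and 200 is the cut-off mentioned above.

```python
from collections import Counter

SITEWIDE_THRESHOLD = 200  # the cut-off used in this article; tune to your site


def split_sitewide(links, threshold=SITEWIDE_THRESHOLD):
    """Split (source, destination) pairs into site-wide destinations and the rest."""
    links = list(links)
    counts = Counter(dest for _, dest in links)
    # A destination that is linked from more than `threshold` rows is site-wide.
    sitewide = {dest for dest, n in counts.items() if n > threshold}
    page_level = [(src, dest) for src, dest in links if dest not in sitewide]
    return sitewide, page_level


# Hypothetical data: one broken footer link on 250 pages, plus one in-content link.
links = [
    (f"https://websitedomain.com/page-{i}", "https://websitedomain.com/en/footer-link")
    for i in range(250)
]
links.append(("https://websitedomain.com/en/", "https://websitedomain.com/en/old-page"))

sitewide, page_level = split_sitewide(links)
print(sitewide, len(page_level))
```

Counting by destination rather than source is what makes this work: a single broken footer URL shows up as hundreds of rows, each with a different source page.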

Step 3.2: Filtering by Specific Website Areas

Next, we used URL-based identifiers in Column B to break the data into specific website sections:

Blog Links: Spotted by URLs containing “blog.websitedomain.com.”

Academy Links: Identified by URLs with “websitedomain.com/academy.”

General Website Links: Any URLs that didn’t fall into the above categories, using more detailed identifiers (like “/en,” “/de,” etc.) to make sure no pages slipped through the cracks.

Step 3.3: Further Segmenting Website Links

Within the general “Website” category, we dug deeper to focus on the most critical areas:

Support Pages: Often hosted on different platforms, these were found using destination URLs in Column C (e.g., “support.websitedomain.com”). Although marketing doesn’t usually manage these directly, we still wanted alerts for significant changes so we could notify the right teams.

Price Pages: These are the bread and butter, the key transactional pages that drive revenue. Identified by URLs like “/en/prices/,” these links were top priority. Any spike in broken links here would trigger an immediate alert because of their direct impact on sales. The threshold for these needs to be very low, for example any increase of more than 2%. You want to know immediately when anything goes wrong with these pages.
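The rules from Steps 3.2 and 3.3 can be sketched as a small Python function. The identifiers are the ones listed above; the category labels, the order of the checks, and the choice to match “/en/prices/” against the source URL are assumptions made for illustration.

```python
from collections import Counter


def categorize(source_url, destination_url):
    """Assign a broken link to a website area using URL identifiers."""
    # Step 3.2: split by where the link lives (source URL, Column B).
    if "blog.websitedomain.com" in source_url:
        return "blog"
    if "websitedomain.com/academy" in source_url:
        return "academy"
    # Step 3.3: segment the remaining general website links.
    if "support.websitedomain.com" in destination_url:
        return "support"  # matched on the destination URL (Column C)
    if "/en/prices/" in source_url:
        return "prices"   # assumption: matched on the source URL
    return "website"


# Hypothetical broken links as (source, destination) pairs.
broken = [
    ("https://blog.websitedomain.com/post", "https://websitedomain.com/en/gone"),
    ("https://websitedomain.com/academy/lesson-1", "https://websitedomain.com/en/gone"),
    ("https://websitedomain.com/en/prices/", "https://websitedomain.com/en/checkout-old"),
    ("https://websitedomain.com/en/about", "https://support.websitedomain.com/article-1"),
    ("https://websitedomain.com/de/about", "https://websitedomain.com/de/gone"),
]

totals = Counter(categorize(src, dest) for src, dest in broken)
print(dict(totals))
```

The per-category totals are what get compared from one day to the next, so each area can carry its own alert threshold.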

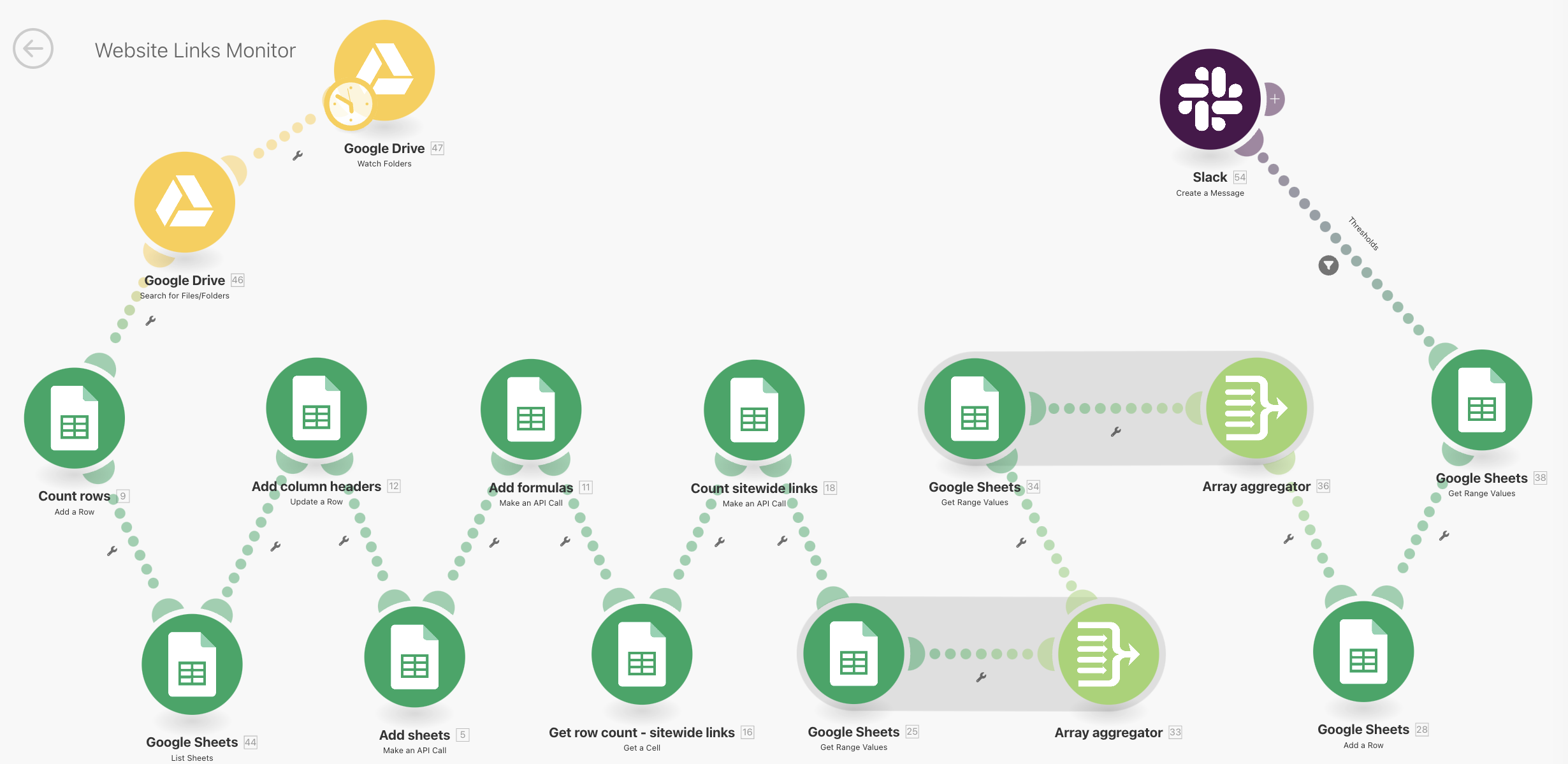

Stage 4: Creating the Logic in MAKE (Integromat)

With your data organized and ready, the final step is setting up the logic in MAKE.com to automate the analysis, categorization, and comparison of broken link data. This is where things get a bit more technical, but following these steps will help you build a powerful system to streamline your website maintenance.

Bear in mind that this is specific to MAKE, though the setup would be fairly similar in other tools such as Zapier. The modules in those tools may have slightly different names and behave a little differently.

Google Drive - Watch Folders Module

This module keeps an eye on your specified Google Drive folder (like “Broken Link Audit”) for new files every day. When Screaming Frog finishes its crawl and saves the export file, this module detects the new folder with the day’s data export and triggers the automation process.

Google Drive - Search for Files/Folders Module

This module locates the exact file within the new folder, ensuring the system grabs the correct broken link data export each day. Set this up to retrieve files whenever a new folder is added with the daily crawl. Screaming Frog’s export is consistently named, so set your search query to “client_error_(4xx)_inlinks” with the search option set to “exact term” to match it perfectly.

Count Rows - Google Sheets (Add a Row) Module

This module counts the rows in your Google Sheet, helping track the volume of data being processed. It identifies whether any new data has been added and ensures the automation handles the right amount each day.

Add Column Headers - Google Sheets (Update a Row) Module

This module ensures that all data columns in your Google Sheet are properly labeled by adding or updating headers. Correct headers are crucial since they define the data points that the rest of the modules will analyze.

Add Formulas - Google Sheets (Make an API Call) Module

This module uses API calls to add formulas to your Google Sheet, automating calculations like summing up broken links per category or comparing today’s data to the previous day’s totals.

Count Sitewide Links - Google Sheets (Make an API Call) Module

This module counts how often sitewide links appear in the data (think links that show up in nav bars or footers across the site). It’s key for categorizing links that have a broader impact.

Get Row Count - Sitewide Links - Google Sheets (Get a Cell) Module

This module pulls the count of sitewide links from specific cells where this data is recorded, helping the system track how many are monitored each day.

Google Sheets - Get Range Values (Get Range Values) Module

This module extracts data from a set range in the Google Sheet, pulling in all the info needed for comparison, including the categorized totals of broken links.

Array Aggregator Modules

These modules group totals from each category, prepping them for comparison. Aggregated data helps determine whether counts have gone up or down compared to the previous day.

Comparison Logic in Google Sheets - Get Range Values

This module checks today’s data against yesterday’s in the summary sheet. Here, you set thresholds that dictate when alerts should be sent, whether for a spike in broken links on key pages or significant changes across other categories.

Slack - Create a Message Module

If the comparison detects that broken links exceed your defined thresholds, this module sends an alert straight to your team’s Slack channel. It makes sure the right people get notified instantly so they can jump on the most urgent issues.
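To make the comparison and alert steps concrete, here is a minimal Python sketch of the same logic outside of MAKE. Only the 2% threshold for price pages comes from this article; the other thresholds, the category names, and the message wording are placeholders. The payload shape (a JSON object with a `text` field) is what Slack incoming webhooks accept, which is one way to post such a message if you are not using the MAKE module.

```python
import json

# Maximum tolerated relative increase per category.
# Only the 2% value for price pages is from the article; the rest are placeholders.
THRESHOLDS = {"prices": 0.02, "sitewide": 0.05}
DEFAULT_THRESHOLD = 0.10


def find_alerts(yesterday, today):
    """Compare per-category broken link counts and list threshold breaches."""
    alerts = []
    for category, count in today.items():
        before = yesterday.get(category, 0)
        if count <= before:
            continue  # no increase, nothing to report
        # Any increase from zero is treated as a spike.
        increase = (count - before) / before if before else float("inf")
        if increase > THRESHOLDS.get(category, DEFAULT_THRESHOLD):
            alerts.append(f"{category}: {before} -> {count} broken links")
    return alerts


def slack_payload(alerts):
    """Build the JSON body for a Slack incoming webhook message."""
    return json.dumps({"text": "Broken link alert\n" + "\n".join(alerts)})


# Hypothetical totals for two consecutive days.
yesterday = {"prices": 50, "blog": 100}
today = {"prices": 52, "blog": 105}

alerts = find_alerts(yesterday, today)
if alerts:
    print(slack_payload(alerts))
```

In this example the price pages go up by 4%, which breaches their 2% threshold, while the 5% rise on the blog stays under the looser default and triggers nothing.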

Challenges Faced

One of the biggest technical challenges was dealing with the sheer volume of data that the broken link export from Screaming Frog generates. Most of the data wasn’t directly relevant to our monitoring needs, but if I let MAKE.com analyze every single entry, the automation would become ridiculously expensive. To tackle this, we used a single API call that specifically pulled only the data we needed to analyze, cutting down on unnecessary processing.

I have to give credit where it’s due—this API wasn’t something I whipped up myself. My incredibly talented colleague, who specializes in marketing automation, developed it based on the list of URL categories and identifiers I provided. By consolidating each step into a single API call, we kept the process efficient and minimized costs, using just one credit per action instead of thousands. Without his expertise, this setup wouldn’t have been possible.

Another key challenge was ensuring the system was accurate and reliable. Any oversight in categorizing pages could lead to the wrong thresholds being applied, which is risky. If an important page group gets overlooked, you might end up with a threshold that’s too lenient, meaning alerts won’t trigger soon enough. This could result in the team being notified too late, after the damage from broken links has already started to hurt user experience and conversions—defeating the whole purpose of the monitor.

To reduce the chances of this happening, we fine-tuned the system by setting specific rules for retries and excluding temporary errors that didn’t need immediate attention. We also continuously reviewed the page categories to make sure everything important was being tracked correctly, allowing us to catch issues before they snowballed into bigger problems.
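The exact retry rules are not spelled out here, but the general idea of not alerting on temporary errors can be sketched as follows: a link only counts as broken if it returns a 404 on every one of several attempts. The function name, the number of attempts, and the injected `fetch_status` callable are all hypothetical; in practice `fetch_status` would wrap whatever HTTP client you use.

```python
def confirmed_broken(url, fetch_status, attempts=3):
    """Return True only if every attempt for `url` comes back as a 404."""
    for _ in range(attempts):
        if fetch_status(url) != 404:
            return False  # the link responded differently at least once
    return True


# Simulated responses: the first check fails, the second succeeds.
responses = iter([404, 200])
flaky = confirmed_broken(
    "https://websitedomain.com/en/prices/",
    lambda url: next(responses),
)
print(flaky)
```

Passing the status check in as a function keeps the retry rule separate from the networking code, which also makes it easy to test with simulated responses like the ones above.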

Results and Impact

Since launching the broken link monitor, our automation has sent several timely alerts directly to our Slack channel, flagging upticks in broken links that could have otherwise gone unnoticed. We caught issues ranging from incorrect URLs in the main navigation, which caused site-wide broken links, to errors in the modules on transactional pages, and even some classic human errors in redirect setups. Each time an alert came in, we investigated immediately, tracked down the root cause, and resolved the issue quickly. Not once did these errors have a chance to build up, meaning the overall performance of the site was always protected.

Thanks to this proactive approach, our website’s health scores have remained consistently high, bounce rates have stayed under control, and we’ve avoided any dips in user experience that might have come from lingering broken links.

Before automating this process, manual checks for broken links were a massive time sink, and honestly, they were never done as regularly as needed. Now, no one on the team has to spend their time hunting down broken links; the monitor does it all for us. It’s a significant time saver that has allowed us to focus on more impactful tasks without worrying about the health of our website slipping through the cracks. Every alert we’ve received has led to quick fixes, keeping our site running smoothly without the usual manual grind.

Conclusion

Automating the broken link monitoring process has been a game-changer, not just for our website’s health but for how we approach repetitive tasks across the board. By taking this tedious task off our hands, the monitor has ensured that broken links are caught and fixed quickly, keeping our site performing at its best and allowing the team to focus on more impactful work. This project has exposed me to a whole new world of marketing automation, from diving into the technical aspects of API writing to discovering just how powerful MAKE.com can be.

What started as a solution for our own needs has now become an inspiration for other teams in the marketing department. They’ve begun using MAKE to streamline their processes, driving efficiency gains across the company. It’s a project I’m incredibly proud of, as it not only solved a persistent problem but also sparked a wider shift towards smarter, more automated workflows.

If you’re dealing with repetitive tasks that drain your team’s time and energy, consider how automation could make a difference. It’s not just about saving time; it’s about creating a more proactive and efficient way of working that keeps everything running smoothly.